Multi-view SLAM for the control of space robots

This project aims to develop new techniques for real-time Simultaneous Localisation and Mapping (SLAM) using multi-view computer vision. It focuses on applying these techniques to space exploration, in collaboration with Thales Alenia Space. This involves the study of autonomous navigation of space robots in unknown environments and of safe landing. We have also participated in stage 2 of the ProVIS Mars challenge.

Some videos of this work are provided below:

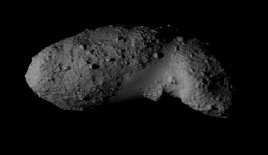

ITOKAWA Asteroid:

Video 1: With only intensity minimisation.

Video 2: With both intensity and depth minimisation.

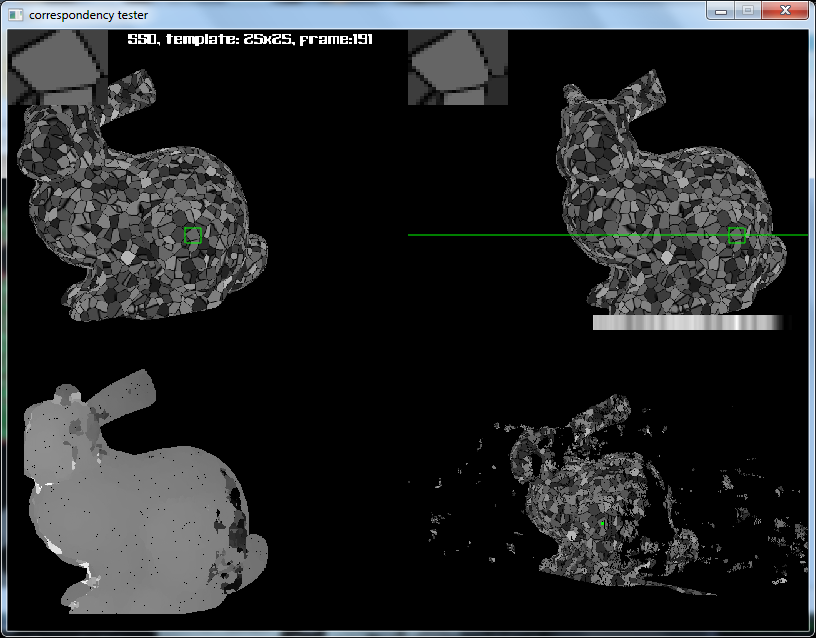

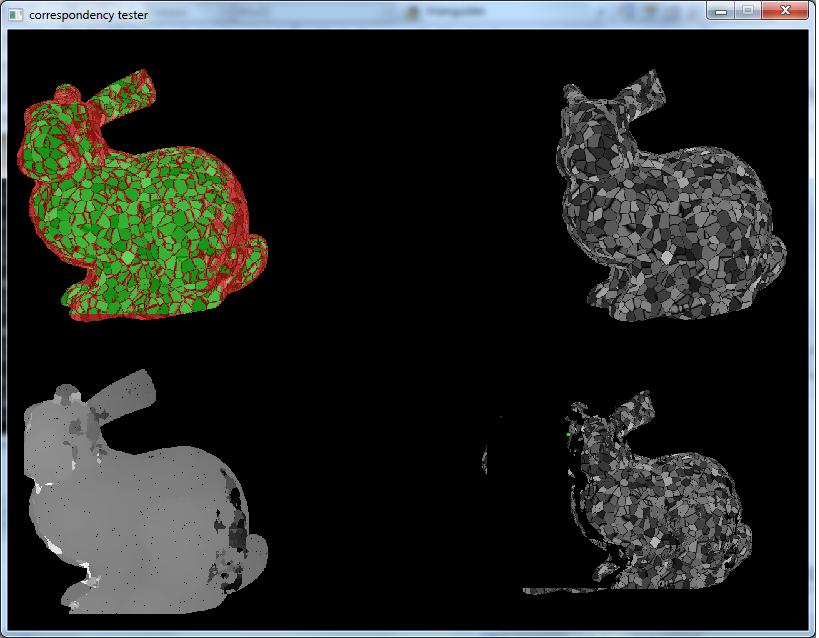

Bunny test sequence

Video: Trajectory estimation with intensity and depth minimisation

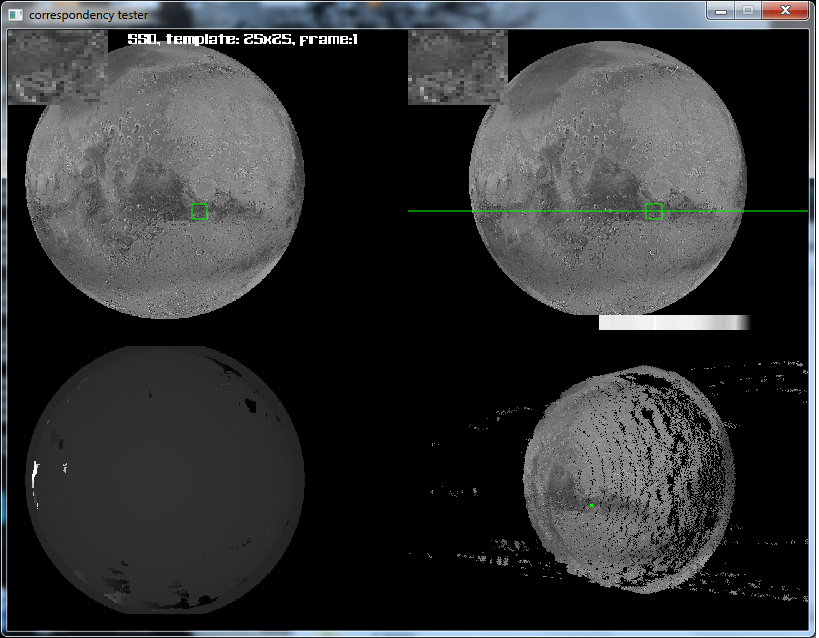

Mars sequences

The primary part of this study concerns developing a visual perception system that localises the spacecraft with respect to reference bodies and performs visual servoing to achieve environment mapping and safe landing. The aim is to use the theory of multi-view differential geometry to perform closed-loop visual servoing control. This requires an analytic relationship between the movement of the space vehicle and the movement of external bodies, such as asteroids and planets, as perceived by the visual sensors. It also includes identifying pertinent control parameters that satisfy a rigorous set of criteria (robustness, stability, precision, efficiency), along with the choice of technical constraints such as the optimal placement of the cameras. In particular, it will be of interest to develop a global task function that allows the spacecraft to behave correctly under online dynamics (for example, the movement of an asteroid), changing illumination conditions (luminosity and visibility), perturbations (such as varying temperatures) or large differences in the perceived scale of the environment.
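As an illustration of what such an analytic relationship can look like, the following is a minimal sketch of classical image-based visual servoing with point features. It is the textbook formulation, not necessarily the method developed in this project, and it assumes a static scene, normalised image coordinates and known point depths. The function names `interaction_matrix` and `ibvs_velocity` and the `gain` parameter are illustrative choices.

```python
import numpy as np


def interaction_matrix(x, y, Z):
    """2x6 interaction matrix of one normalised image point (x, y) at depth Z.

    Maps the camera velocity (vx, vy, vz, wx, wy, wz) to the image-plane
    velocity (x_dot, y_dot) of the point, assuming a static scene.
    """
    return np.array([
        [-1.0 / Z, 0.0, x / Z, x * y, -(1.0 + x * x), y],
        [0.0, -1.0 / Z, y / Z, 1.0 + y * y, -x * y, -x],
    ])


def ibvs_velocity(points, desired, depths, gain=0.5):
    """Camera velocity that drives the point features towards `desired`.

    points, desired: (N, 2) arrays of normalised image coordinates.
    depths: (N,) array of (estimated) point depths in the camera frame.
    """
    points = np.asarray(points, dtype=float)
    desired = np.asarray(desired, dtype=float)
    # Stack one 2x6 block per point into a (2N, 6) matrix.
    L = np.vstack([interaction_matrix(x, y, Z)
                   for (x, y), Z in zip(points, depths)])
    # Feature error, ordered x0, y0, x1, y1, ...
    error = (points - desired).reshape(-1)
    # Least-squares solution of L v = -gain * error.
    return -gain * np.linalg.pinv(L) @ error


# Hypothetical example: four points at unit depth, all shifted along x.
desired = np.array([[0.1, 0.1], [-0.1, 0.1], [-0.1, -0.1], [0.1, -0.1]])
current = desired + np.array([0.02, 0.0])
v = ibvs_velocity(current, desired, depths=np.ones(4))
print(v)
```

In this example the feature error corresponds to a pure sideways offset, so the computed velocity is, up to numerical precision, a translation along the camera x axis with the other five components close to zero. A moving target such as a rotating asteroid would add a further term to the feature velocity, which this sketch does not model.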

The second part will involve developing algorithms for mapping the environment, both in an off-line learning phase and in an online update phase. The off-line phase reduces the computational complexity of the online problem by performing the bulk of the computation in advance. This will involve creating a navigational framework for building image-based maps within which a robot is to execute a global task. Given a set of training measurements, task planning consists of constructing optimal representations of these maps so that localisation and control of the spacecraft can be performed precisely whilst remaining invariant to dynamic changes in the environment. The online map will be updated and integrated into the control objective when new, better-quality measurements become available, for example during a landing phase, where higher-resolution images are available closer to the ground.
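The online update rule can be pictured with a small sketch. This is only an assumed, simplified policy in which each map region keeps the measurement with the finest resolution; the `Measurement` and `ImageMap` classes, the region and image identifiers, and the use of metres per pixel as the quality measure are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Measurement:
    image_id: str
    metres_per_pixel: float  # smaller value = higher resolution


@dataclass
class ImageMap:
    # Hypothetical map: the best measurement seen so far for each region.
    cells: dict = field(default_factory=dict)

    def update(self, region, measurement):
        """Store `measurement` if the region is new or it has finer resolution.

        Returns True when the map was changed.
        """
        best = self.cells.get(region)
        if best is None or measurement.metres_per_pixel < best.metres_per_pixel:
            self.cells[region] = measurement
            return True
        return False


# Off-line phase: build the map from coarse training images.
image_map = ImageMap()
image_map.update("crater_a", Measurement("orbit_001", 2.0))

# Online phase: a closer, sharper view replaces the stored one,
# while a coarser view is ignored.
replaced = image_map.update("crater_a", Measurement("descent_017", 0.25))
ignored = image_map.update("crater_a", Measurement("orbit_002", 5.0))
```

A real system would need a richer quality measure than resolution alone (for instance illumination or viewing angle), but the same compare-and-replace structure applies.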

Some associated publications are:

Tykkälä, T. M. & Comport, A. I. (2011). Direct Iterative Closest Point for Real-time Visual Odometry. In The Second International Workshop on Computer Vision in Vehicle Technology: From Earth to Mars, in conjunction with the International Conference on Computer Vision. Barcelona, Spain.

Tykkälä, T. M. & Comport, A. I. (2011). A Dense Structure Model for Image Based Stereo SLAM. In IEEE International Conference on Robotics and Automation, ICRA'11. Shanghai, China.

Tykkälä, T. & Comport, A. I. (2010). A Dense Structure Model for Image Based Stereo SLAM. In Journée des Jeunes Chercheurs en Robotique - Journées Nationales du GDR Robotique. Paris, France.