Spherical dense visual SLAM

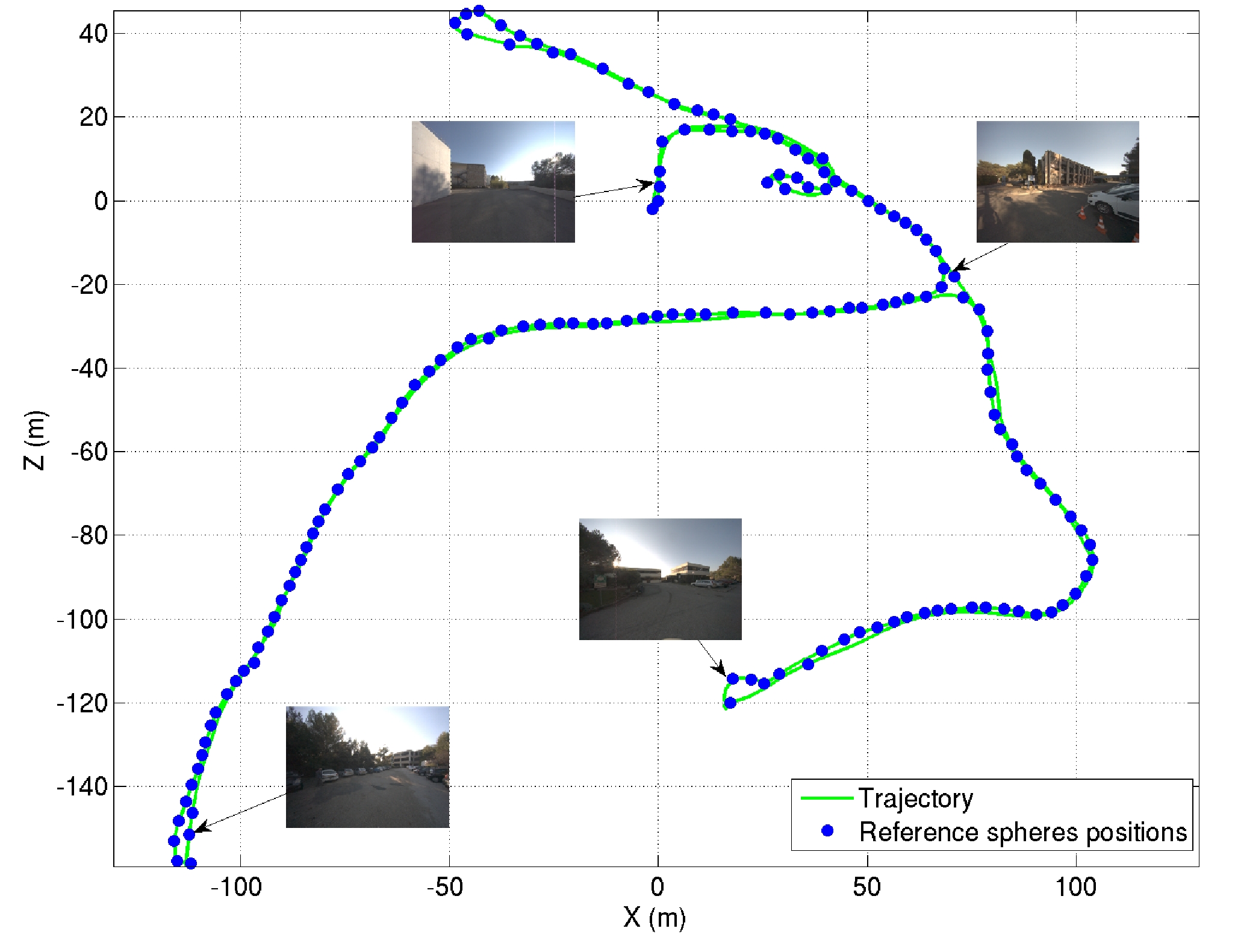

In this work we propose an ego-centric spherical model of the environment for autonomous navigation. The approach relies on a representation of the scene based on spherical images augmented with depth information and a spherical saliency map, both constructed during a learning phase. Saliency maps are built by identifying the points that best condition the spherical projection constraints in the image. This representation is then used for vision-based navigation in real urban environments. During navigation, an image-based registration technique combined with robust outlier rejection is used to precisely locate the vehicle. Computational time is also reduced by better representing and selecting information from the reference sphere and the current image, without degrading matching. It will be shown that, by using this pre-learned global spherical memory, no error accumulates along the trajectory and the vehicle can be precisely located without drift.
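Two ingredients of the registration described above can be sketched in a few lines: choosing salient pixels, and down-weighting outliers. The sketch below is only an illustration under stated assumptions. It assumes saliency is measured by the magnitude of each pixel's entry in the N x 6 Jacobian of the photometric error with respect to the 6-DoF pose, with the strongest pixels kept for each degree of freedom. It also assumes outliers are handled by a Tukey biweight M-estimator with a MAD scale estimate. The source names neither criterion, and the function names `select_salient_pixels` and `tukey_weights` are hypothetical.

```python
import numpy as np


def select_salient_pixels(J, n_per_dof):
    """Hypothetical saliency selection (an assumption, not the authors' code).

    For each pose parameter (column of the N x 6 Jacobian J), keep the
    n_per_dof not-yet-selected pixels whose Jacobian entry has the largest
    magnitude. Returns the sorted indices of the selected pixels.
    """
    J = np.asarray(J, dtype=float)
    selected = set()
    for j in range(J.shape[1]):
        count = 0
        # Pixels ordered from most to least sensitive to pose parameter j
        for idx in np.argsort(-np.abs(J[:, j])):
            if count == n_per_dof:
                break
            if int(idx) not in selected:
                selected.add(int(idx))
                count += 1
    return np.array(sorted(selected), dtype=int)


def tukey_weights(residuals, c=4.6851):
    """Tukey biweight M-estimator weights (assumed choice of robust estimator).

    The residual scale is estimated robustly with the median absolute
    deviation (MAD). Residuals larger than c * sigma get weight 0.
    """
    r = np.asarray(residuals, dtype=float)
    mad = np.median(np.abs(r - np.median(r)))
    sigma = 1.4826 * mad if mad > 0 else 1.0
    u = r / (c * sigma)
    # Weight decreases smoothly to zero and stays zero beyond the cutoff
    return np.where(np.abs(u) < 1.0, (1.0 - u**2) ** 2, 0.0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    J = rng.normal(size=(1000, 6))       # stand-in Jacobian for 1000 pixels
    idx = select_salient_pixels(J, 50)   # 6 x 50 = 300 distinct pixels
    r = np.concatenate([rng.normal(0.0, 1.0, 95), [50.0, -60.0, 80.0, 70.0, -90.0]])
    w = tukey_weights(r)
    print(len(idx), w[-5:])
```

In an iteratively reweighted least-squares loop, `tukey_weights` would be recomputed from the residuals of the selected pixels at each iteration. Gross outliers, such as moving cars or occlusions, then stop contributing to the pose update.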

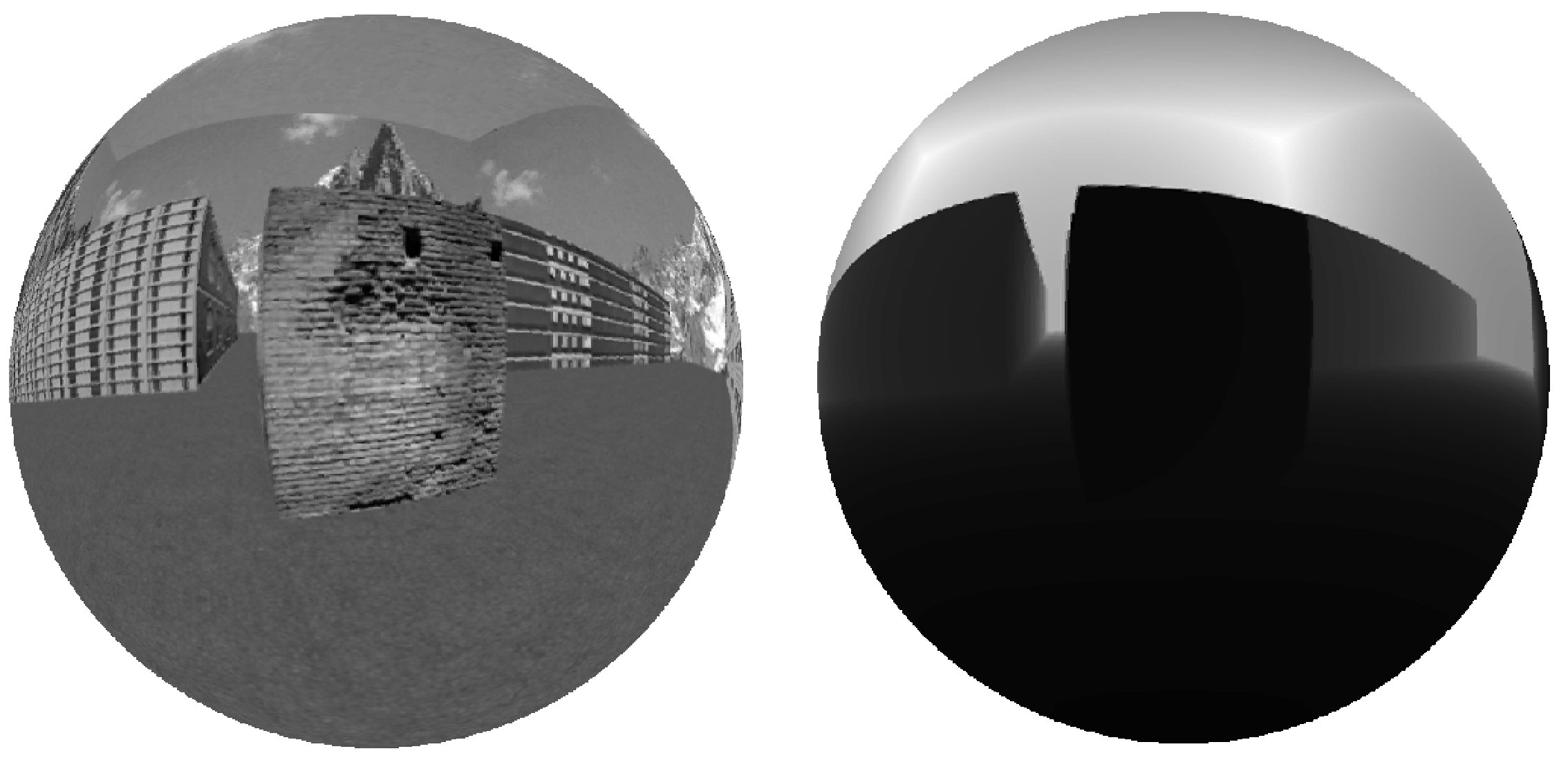

Each sphere is composed of a photometric panorama and a depth sphere.
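The following is a minimal sketch of how a depth sphere relates to 3D geometry. It assumes the panorama and the depth sphere are stored as equirectangular images of the same size H x W. Under that assumption, column u maps to longitude and row v maps to latitude, and the photometric panorama shares the same pixel grid. The storage format, the axis convention (y up, z forward), and the function names are all assumptions, since the source does not give them.

```python
import numpy as np


def sphere_to_points(depth):
    """Back-project an equirectangular depth sphere (H x W) to 3D points.

    Assumed convention: longitude theta in [-pi, pi) along columns,
    latitude phi in (-pi/2, pi/2) along rows (row 0 at the top), y up.
    """
    H, W = depth.shape
    v, u = np.mgrid[0:H, 0:W]
    theta = (u + 0.5) / W * 2.0 * np.pi - np.pi
    phi = np.pi / 2.0 - (v + 0.5) / H * np.pi
    # Unit viewing ray for every pixel of the sphere
    rays = np.stack([np.cos(phi) * np.sin(theta),
                     np.sin(phi),
                     np.cos(phi) * np.cos(theta)], axis=-1)
    return rays * depth[..., None]


def project_to_sphere(points, H, W):
    """Spherical projection: 3D points -> pixel coordinates (u, v) and range."""
    X, Y, Z = points[..., 0], points[..., 1], points[..., 2]
    rho = np.sqrt(X**2 + Y**2 + Z**2)
    theta = np.arctan2(X, Z)
    phi = np.arcsin(Y / rho)
    u = (theta + np.pi) / (2.0 * np.pi) * W - 0.5
    v = (np.pi / 2.0 - phi) / np.pi * H - 0.5
    return u, v, rho
```

In registration, the points of a reference sphere would be transformed by the candidate pose and passed to `project_to_sphere`. The resulting (u, v) coordinates are where the current panorama is sampled for comparison with the reference intensities. With an identity pose, the projection returns each pixel to its own coordinates.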

Multi-baseline stereo spherical acquisition system.

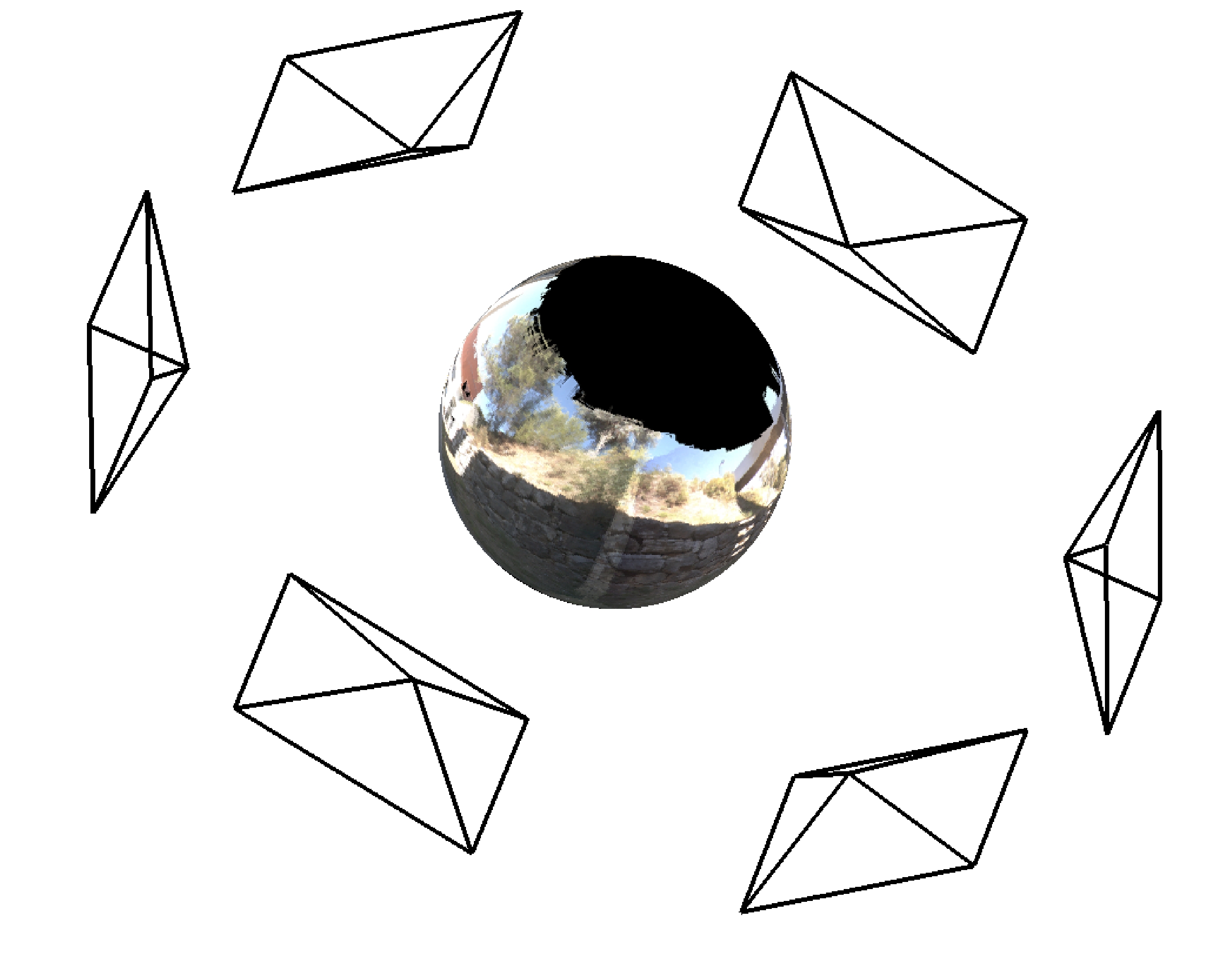

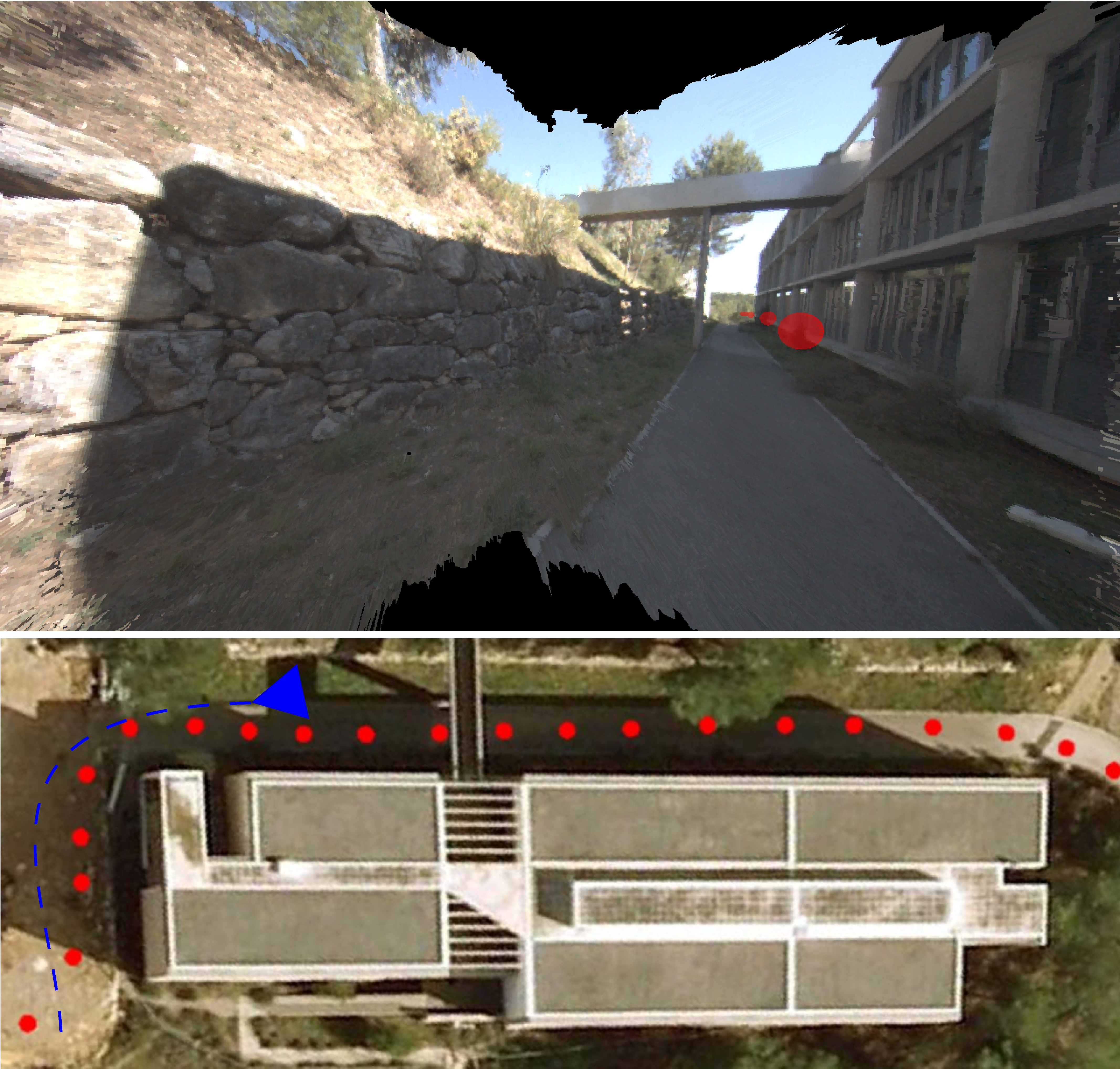

The spherical visual memory of a given trajectory.

Virtual navigation from a graph of spheres.
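A graph of spheres can be kept as a list of sphere centres plus adjacency links along the learned trajectory. The sketch below shows one simple way to choose which reference spheres to register against. It takes the sphere closest to the current position estimate, then that sphere's graph neighbours. The class `SphereGraph`, the purely positional distance, and the chain-shaped adjacency are hypothetical simplifications. They are not taken from the source.

```python
import numpy as np


class SphereGraph:
    """Hypothetical graph of reference spheres built during learning."""

    def __init__(self, centres):
        self.centres = np.asarray(centres, dtype=float)  # (N, 3) sphere positions
        n = len(self.centres)
        # Assumption: spheres are linked to their predecessor and successor
        # along the learning trajectory
        self.neighbours = {i: {j for j in (i - 1, i + 1) if 0 <= j < n}
                           for i in range(n)}

    def closest(self, position):
        """Index of the sphere whose centre is nearest to `position`."""
        d = np.linalg.norm(self.centres - np.asarray(position, dtype=float), axis=1)
        return int(np.argmin(d))

    def candidates(self, position):
        """Closest sphere first, then its graph neighbours."""
        i = self.closest(position)
        return [i] + sorted(self.neighbours[i])
```

For example, with spheres at x = 0, 5 and 10 m, a vehicle estimated at x = 6 m gets the candidate list `[1, 0, 2]`. A real system would probably also weigh orientation and visibility, not only distance.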

This research was carried out in collaboration with the Arobas team of INRIA Sophia-Antipolis. We presented the following papers, based on the spherical environment model, at IROS 2010:

Some videos illustrating the results can be found here: